How a Client Fine-Tuned Attribution Models with Incrementality

March 20, 2020

Incrementality measurement is not a new concept in marketing, but a quick Google search shows that it resurfaced as a trending topic in 2018 and 2019, with new articles ranging from best practices to different testing methodologies. Today, it is no longer a nice-to-have but a necessity in every mobile marketing and growth strategy. As a scientifically grounded approach to measurement, incremental uplift is a must for marketers who want to understand the real impact of their advertising efforts.

But how exactly does incrementality go hand-in-hand with mobile attribution and its widely accepted best practices? One Remerge client's approach, using incrementality measurement to continuously validate their attribution models, has proven successful.

Attribution

In mobile marketing, various attribution models exist as a way to identify and give credit for ad conversions to the right traction channels. Some of the recognized models include first-touch, last-touch, multi-touch, and view-through attribution (VTA). The naming conventions are based on how the conversion is credited. For example, last-click attribution gives all the credit to the last touch point a user had before continuing on to a conversion, whereas multi-touch attribution puts different weights (all currently arbitrary, as they differ from advertiser to advertiser) on all possible conversion touch points.

Which model is best for an app is still up for debate. Overall, attribution is an established and conventional way for marketers to track ROI, as the practices are standardized across the industry. However, these models are not entirely accurate, which is where incrementality comes into play.

Incrementality

Incremental uplift measurement has become a new solution to attribution-based problems, as it stems from the need for better alignment. Due to its scientific nature, incrementality provides a clearer picture of the increase in sales caused by ads. Testing methodologies include creating control and test groups, similar to medical studies that test the efficacy of new drugs: ads are shown only to the test group, and the behavior of both groups is then monitored. The results are scientifically sound; however, validating them takes time.

Nevertheless, the time invested in validating incremental uplift is still an improvement in overall measurement, as attribution windows are usually based on “what feels right”, whereas incrementality measurement follows a more objective approach. Relying on a more objective model means that organic conversions, conversions that would have happened without advertising anyway, are excluded from the results.
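As a rough illustration of the test-versus-control comparison described above, the following minimal sketch estimates incremental conversions by subtracting the organic baseline observed in the control group from the test group's conversions. The function names and the group sizes in the usage note are hypothetical, and the sketch ignores statistical significance, which a real uplift test would need to check.

```python
def incremental_conversions(test_users, test_conversions,
                            control_users, control_conversions):
    """Estimate how many test-group conversions were caused by ads.

    Assumption: the control group (no ads) shows the organic conversion
    rate the test group would have had without advertising.
    """
    if test_users <= 0 or control_users <= 0:
        raise ValueError("group sizes must be positive")
    # Organic conversions expected in the test group, scaled by group size.
    expected_organic = control_conversions * test_users / control_users
    return test_conversions - expected_organic


def relative_uplift(test_users, test_conversions,
                    control_users, control_conversions):
    """Relative lift of the test conversion rate over the control rate."""
    if test_users <= 0 or control_users <= 0:
        raise ValueError("group sizes must be positive")
    if control_conversions <= 0:
        raise ValueError("control group needs at least one conversion")
    ratio = (test_conversions * control_users) / (control_conversions * test_users)
    return ratio - 1.0
```

With made-up numbers, a test group of 80,000 users with 2,400 conversions and a control group of 20,000 users with 400 conversions gives `incremental_conversions(80_000, 2_400, 20_000, 400)` equal to 800 and a relative uplift of 0.5, meaning 1,600 of the test group's conversions would be treated as organic.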

But aren't incrementality and attribution two separate concepts?

While we previously wrote about how attribution and incrementality are completely independent concepts, we’ve seen cases of Remerge clients using incrementality measurement to calibrate their attribution settings and to validate that the models are as accurate as can be. So even if these measurement methodologies and their respective KPIs remain somewhat independent, the results can be compared to validate attribution models.

With regard to KPIs, attributed KPIs are more standardized and provide a common ground for all marketing activities, whereas incrementality methodologies are not standardized across partners. The key is to be critical of how incremental uplift is measured.

Case study: A different approach to incrementality

In this example, a Remerge client uses multi-touch attribution for their marketing channels, weighting Remerge conversions at 50%. This means that 50% of the credit is given to Remerge for all conversions, a.k.a. 50 cents on the dollar.

The client wanted to verify whether they were over- or under-crediting Remerge (and, incidentally, their other marketing efforts or partners). Using the ghost bids methodology, they ran a 28-day uplift test and analyzed all conversions within that time frame. Both attributed KPIs and incremental KPIs were collected and compared against each other. The incremental conversions were then used as a guideline for adjusting attributed KPIs.

For example, in a 28-day period, 1000 conversions were attributed to Remerge via the client’s mobile measurement platform. This number is cross-checked with how many incremental conversions Remerge drove. There are three possible outcomes:

Incremental conversions < Attributed conversions

If, for the same period, Remerge provided 500 incremental conversions (versus 1000 attributed conversions), the client can conclude that they were over-crediting us. The uplift test shows that the other 500 conversions were in fact not driven by Remerge ads (they may be the result of organic cannibalization, or conversions attributed to us on a last-click basis that were not incremental).

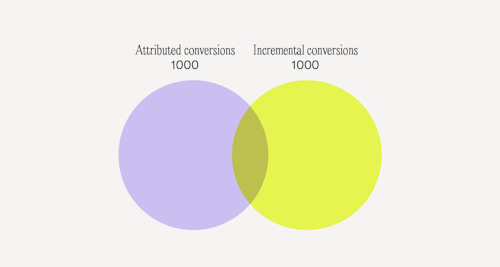

Incremental conversions = Attributed conversions

If the results show 1000 attributed conversions and 1000 incremental conversions, then it is safe to say that the measurement system is properly calibrated, even if the two sets do not consist of exactly the same conversions.

A visual representation of conversions, where some attributed conversions are also incremental while others are not, and vice versa.

Incremental conversions > Attributed conversions

On the other hand, if the client measures 2000 incremental conversions as opposed to 1000 attributed conversions, then they are tracking fewer conversions than Remerge is actually delivering on top of everything else. In this scenario, the client is under-crediting their retargeting partner.
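The three outcomes above boil down to comparing two counts. The sketch below is one way to express that check; the function name and the optional `tolerance` parameter (a band around equality within which the model is treated as calibrated) are assumptions for illustration, not part of the client's setup.

```python
def crediting_status(attributed, incremental, tolerance=0.0):
    """Classify a partner as over-credited, under-credited, or calibrated.

    `tolerance` is a hypothetical relative band around a 1:1 ratio,
    e.g. 0.05 treats ratios between 0.95 and 1.05 as calibrated.
    """
    if attributed <= 0:
        raise ValueError("attributed conversions must be positive")
    ratio = incremental / attributed
    if ratio < 1 - tolerance:
        return "over-credited"    # fewer incremental than attributed
    if ratio > 1 + tolerance:
        return "under-credited"   # more incremental than attributed
    return "calibrated"


# The three scenarios from the case study, each with 1000 attributed conversions.
for incremental in (500, 1000, 2000):
    print(incremental, crediting_status(1000, incremental))
```

Run against the case-study numbers, 500 incremental conversions are classified as over-credited, 1000 as calibrated, and 2000 as under-credited.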

How to calibrate your attribution model and CPAs based on incremental conversions

Run the test for a fixed period of time, measuring all incremental and attributed conversions in this period. You will want to repeat the test regularly (once per quarter would be a good start) and reanalyze the results, as the findings will not be the same each time. This is due to changing audiences, interests, trends, creatives, and so on.

For multi-touch models where incremental conversions are higher than attributed conversions, you’ll need to increase the weighted percentage driven by that channel. Conversely, you’ll want to decrease the percentage if your partner is being over-credited.
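The article does not prescribe how far to move the weight. One simple option, shown here purely as an assumption, is to scale the current weight by the ratio of incremental to attributed conversions and cap the result at 100%. The function name is hypothetical, and the sketch assumes the attributed count already reflects the current weight.

```python
def recalibrated_weight(current_weight, attributed, incremental, max_weight=1.0):
    """Scale a multi-touch weight by the incremental/attributed ratio.

    Assumption: proportional scaling, capped between 0 and `max_weight`.
    Other adjustment rules are equally plausible.
    """
    if not 0.0 <= current_weight <= max_weight:
        raise ValueError("current_weight must lie between 0 and max_weight")
    if attributed <= 0:
        raise ValueError("attributed conversions must be positive")
    scaled = current_weight * incremental / attributed
    # A weight cannot go below 0% or above the cap.
    return min(max(scaled, 0.0), max_weight)
```

Starting from the 50% weight in the case study, `recalibrated_weight(0.5, 1000, 500)` returns 0.25 for an over-credited partner, while `recalibrated_weight(0.5, 1000, 2000)` returns 1.0 for an under-credited one.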

For last-click models, calibration means adapting your targets. Imagine your target CPA is $10 and after spending $10,000, you get 1000 conversions (1000 conversions x $10 = $10,000). This means you are on target. Your attribution model shows that each conversion costs $10.

Simultaneously running an uplift test shows that your partner actually delivered 2000 incremental conversions, not 1000. This means that the incremental CPA was not $10 but in fact $5. Consequently, the target for attributed KPIs needs to be raised to $20. If each attributed conversion in fact corresponds to two incremental conversions, an attributed CPX target of $2X is appropriate, because paying $2X per attributed conversion means paying $X per incremental conversion.

The same works the other way around. If the partner delivered only 500 incremental conversions, the incremental CPA was in fact $20, so the attributed target must be lowered to $5 per conversion.

This setup allows you to see if you are tracking attributed KPIs in a way that reflects the incremental value of the campaigns. After that, you can say goodbye to paying double for good.
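The arithmetic in the last-click example can be written down as two small helpers. This is a minimal sketch using the article's own numbers; the function names are hypothetical.

```python
def incremental_cpa(spend, incremental):
    """Cost per incremental conversion."""
    if incremental <= 0:
        raise ValueError("incremental conversions must be positive")
    return spend / incremental


def adjusted_attributed_target(target_cpa, attributed, incremental):
    """Attributed CPA target that corresponds to `target_cpa` per incremental conversion.

    If each attributed conversion stands for several incremental ones,
    the attributed target rises by the same factor, and vice versa.
    """
    if attributed <= 0:
        raise ValueError("attributed conversions must be positive")
    return target_cpa * incremental / attributed


# $10 target, $10,000 spend, 1000 attributed conversions.
print(incremental_cpa(10_000, 2000))                 # 5.0
print(adjusted_attributed_target(10, 1000, 2000))    # 20.0
print(incremental_cpa(10_000, 500))                  # 20.0
print(adjusted_attributed_target(10, 1000, 500))     # 5.0
```

The first pair reproduces the under-credited case ($5 incremental CPA, $20 attributed target) and the second pair the over-credited case ($20 incremental CPA, $5 attributed target).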

Wrapping Up

Based on the insights gathered through Remerge’s continuous incrementality tests, our client was able to calibrate their attribution model not only for Remerge but across all retargeting partners.

Incrementality measurement is a must-have for accurately measuring ROI, and for those who are not ready to make the full switch to incremental KPIs, using uplift test results to validate and regularly update attribution models is a big leap towards measuring the real impact of different marketing efforts.